Zero Downtime Deployment of Episerver

8 minute read

Target audience

This post is aimed primarily at technical staff, such as developers, DevOps engineers, and architects, or anyone else who enjoys the inner workings of zero downtime deployments. The business perspective of continuous delivery will be addressed in an upcoming customer case presentation.

Advantages of Zero Downtime Deployment

By implementing this deployment process, we are able to:

- Deploy new or improved features without affecting site visitors

- Prepare related content to be published as part of a release

- Embrace continuous delivery, including testing against up-to-date production content

- Roll back instantly

At a glance

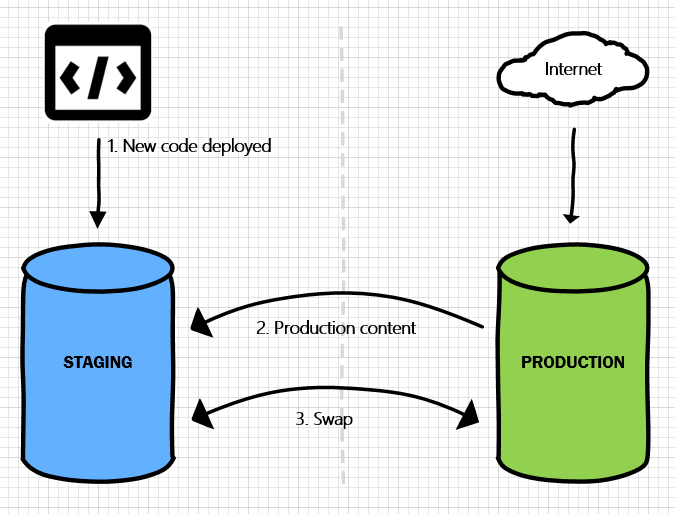

The idea is to have two virtually identical environments, named Blue and Green after the blue-green deployment process described by Martin Fowler.

At any given time, one environment is publicly available (the production environment) while the other acts as a private staging environment.

When new code is committed, it is built and deployed to the staging environment. If the deployment succeeds, production content (database, blob storage, etc.) is copied from the production environment to the staging environment.
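The order of these two steps matters: content is copied only after the code deployment to staging has succeeded. The following is a minimal sketch of that rule. It assumes hypothetical `deploy` and `copy_content` callables supplied by the caller; these names are illustrative and are not part of Episerver or any deployment tool.

```python
from typing import Callable


def deploy_to_staging(
    production: str,
    staging: str,
    deploy: Callable[[str], bool],
    copy_content: Callable[[str, str], None],
) -> bool:
    """Deploy new code to staging, then copy production content into it.

    Content is copied only if the deployment succeeded. A failed deployment
    leaves staging as it was, and production is never touched.
    """
    if not deploy(staging):
        return False
    # Bring staging up to date with production (database, blob storage, etc.).
    copy_content(production, staging)
    return True
```

Before the first swap, Green is production and Blue is staging, so a call would look like `deploy_to_staging("Green", "Blue", deploy, copy_content)`. In a real pipeline, the two callables would stand in for whatever scripts the build server runs for each step.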

At this point, we can test, and optionally carry out content work, in the staging environment. When ready, we simply swap the two environments. The staging environment then becomes the production environment, and vice versa:

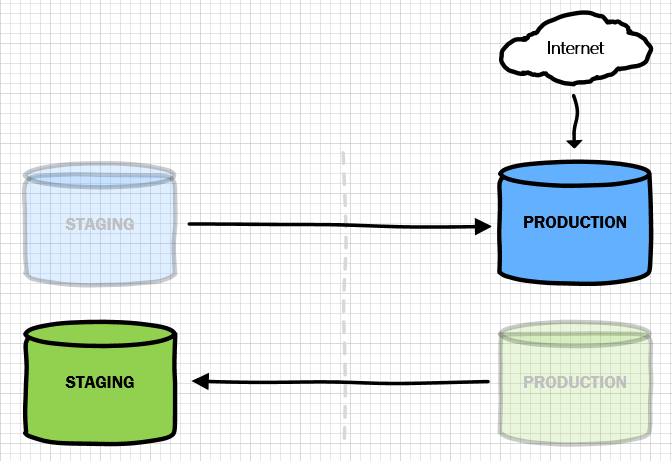

To clarify, after the swap the Blue environment is the production environment, and the Green environment acts as the staging environment for the next deployment.

Notably, the swap is 100% transparent to site visitors. The staging environment can be fully warmed up before the swap, for example to populate output and data caches, so there is zero performance impact when the swap occurs. A site visitor who keeps reloading the page will simply, all of a sudden, see new content or features.
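The swap only changes which environment receives public traffic. This is also why rollback is instantaneous: the previous production environment is still running, so rolling back is just another swap.

The following is a minimal sketch of gating the swap on a successful warm-up. It assumes a caller-supplied `fetch(environment, path)` function that performs an HTTP GET against that environment and returns the status code. The `EnvironmentPair`, `warm_up`, and `go_live` names are illustrative assumptions, as is the rule that every warm-up path must return 200.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Hypothetical signature: fetch(environment, path) -> HTTP status code.
Fetch = Callable[[str, str], int]


@dataclass
class EnvironmentPair:
    """Hypothetical model of the Blue/Green pair: one live, one staging."""
    production: str = "Green"
    staging: str = "Blue"

    def swap(self) -> None:
        # Only the routing changes; both environments keep running.
        self.production, self.staging = self.staging, self.production


def warm_up(env: str, paths: Iterable[str], fetch: Fetch) -> bool:
    """Request paths on env in order; return False at the first non-200."""
    return all(fetch(env, path) == 200 for path in paths)


def go_live(pair: EnvironmentPair, paths: Iterable[str], fetch: Fetch) -> bool:
    """Swap only once staging is warm, so visitors never hit a cold site."""
    if not warm_up(pair.staging, paths, fetch):
        return False
    pair.swap()
    return True
```

After a successful `go_live`, calling `pair.swap()` again performs the rollback.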

Fully automating the deployment process

To fully adopt continuous delivery, the build and deployment processes need to be automated, for example using a build server such as TeamCity and a deployment server such as Octopus Deploy.

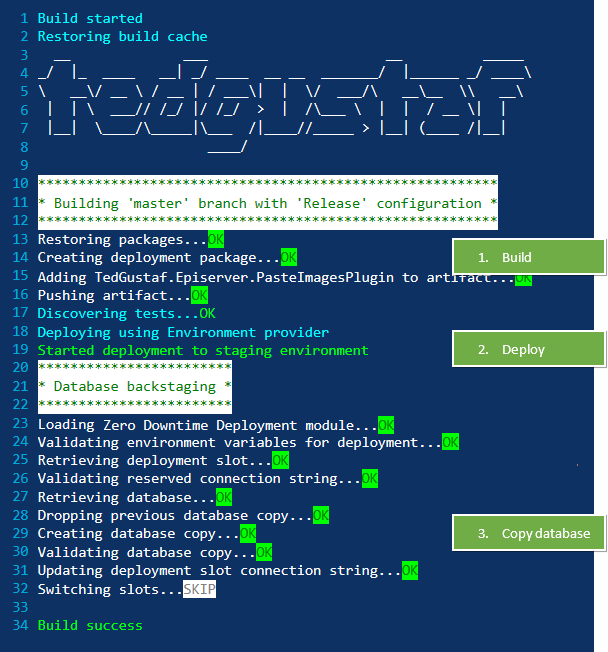

In our case, we commonly use AppVeyor, which acts as both build server and deployment server. As with TeamCity and Octopus Deploy, the build and deployment processes can be customized using PowerShell scripts.
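A key property of such a pipeline is that a failing step stops everything after it, so a broken build or a failed warm-up never reaches the swap. The following is a sketch of that ordering. It is written in Python rather than the PowerShell the build servers run, and the step names and the `run_pipeline` function are hypothetical.

```python
from typing import Callable, List, Tuple

# A step is a name plus an action that reports success or failure.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order and halt at the first failure.

    Returns whether the whole pipeline succeeded, plus the names of the steps
    that ran (the failing step, if any, is marked as FAILED).
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            return False, completed + [f"{name}: FAILED"]
        completed.append(name)
    return True, completed
```

Consider a pipeline of "build", "deploy to staging", "copy production content", "warm up staging", and "swap". If the warm-up step fails, the run stops there, and the swap step is never executed.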

As an example, this is the output from AppVeyor when code is committed to the master branch of our Episerver website:

Tweaking deployment through commit messages

If you look closely at line 32 of the AppVeyor output, you will notice that it says SKIP. This indicates that the deployment slots (the Blue and Green environments) will not be swapped automatically after the deployment, which allows us to test and validate the deployment in the staging environment before go-live.

If we want to deploy a hotfix that we do not want or need to validate in the staging environment before go-live, we can include the text [autoswap] in the commit message. This deploys to the staging environment, warms it up, and then swaps automatically.

Similarly, if we want to deploy to the staging environment without backstaging content, we can embed [skipcontent] in the commit message. This tells our deployment script to skip copying content from the production environment.
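The following is a minimal sketch of turning these commit-message tags into deployment options. The defaults mirror the behavior described above: no automatic swap unless `[autoswap]` is present, and content is copied unless `[skipcontent]` is present. In AppVeyor, a script can read the commit message from the `APPVEYOR_REPO_COMMIT_MESSAGE` environment variable. The `DeployOptions` type and `parse_commit_message` function are hypothetical, and exact, case-sensitive matching is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeployOptions:
    autoswap: bool      # swap right after warm-up (e.g. for hotfixes)
    copy_content: bool  # backstage production content into staging


def parse_commit_message(message: str) -> DeployOptions:
    """Derive deployment options from tags embedded in a commit message."""
    return DeployOptions(
        autoswap="[autoswap]" in message,
        copy_content="[skipcontent]" not in message,
    )
```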

Episerver database schema updates

As part of Episerver's continuous delivery process, we regularly receive software updates through their NuGet feed. These may include schema updates that are applied to the database when the application starts.

In our case, these updates will be applied to the staging database, which at that point is an exact copy of the production database. This means we can properly validate the update before it goes live.
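Because staging starts out as an exact copy of production, a schema change can be tried on the copy while production stays untouched. The following sketch illustrates the idea with Python's built-in `sqlite3` module, using in-memory databases as stand-ins for the real databases. The table and column names are hypothetical, and this is not how Episerver applies its updates; as noted above, those run at application startup.

```python
import sqlite3
from typing import Sequence


def stage_copy(production: sqlite3.Connection) -> sqlite3.Connection:
    """Create a staging database as an exact copy of production."""
    # isolation_level=None puts the connection in autocommit mode, so the
    # explicit BEGIN/COMMIT/ROLLBACK in apply_schema_update control transactions.
    staging = sqlite3.connect(":memory:", isolation_level=None)
    production.backup(staging)
    return staging


def apply_schema_update(db: sqlite3.Connection, statements: Sequence[str]) -> bool:
    """Apply all statements in one transaction; roll back on any error."""
    db.execute("BEGIN")
    try:
        for sql in statements:
            db.execute(sql)
    except sqlite3.Error:
        if db.in_transaction:
            db.execute("ROLLBACK")
        return False
    db.execute("COMMIT")
    return True
```

A failed update leaves the staging copy as it was, and production is unaffected either way, because the update only ever runs against the copy.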

Noting a few caveats

There are a few things to take into consideration when introducing this process. For one, between the time content is copied from the production environment and the time of the swap, you effectively cannot publish content to the production environment, since that content would be lost when the swap occurs.

Similarly, if you operate an e-commerce website or similar, you will need to ensure that no data, such as orders, is lost in the period between copying content from the production environment and the swap.
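One way to guard against this is to record when content was copied and, right before the swap, check whether production has received any writes since then. If it has, you can either copy content again or postpone the swap.

The following is a minimal sketch that assumes production writes can be listed with timestamps. The `ProductionActivity` type and the helper functions are hypothetical; in practice this might be a query against content change dates or an orders table.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class ProductionActivity:
    """Hypothetical log of write timestamps (publishes, orders) in production."""
    writes: List[datetime] = field(default_factory=list)

    def record(self, when: Optional[datetime] = None) -> None:
        # Default to "now" in UTC so all timestamps are comparable.
        self.writes.append(when if when is not None else datetime.now(timezone.utc))


def writes_since(activity: ProductionActivity, copied_at: datetime) -> List[datetime]:
    """Return production writes made after content was copied to staging."""
    return [w for w in activity.writes if w > copied_at]


def can_swap(activity: ProductionActivity, copied_at: datetime) -> bool:
    """Swapping is only safe if production has not changed since the copy."""
    return not writes_since(activity, copied_at)
```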

Also, if your website is stateful, you will need to decide how to manage that state. Otherwise, users may, for example, be logged out after the swap.
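One common approach is to keep session state outside the environments, in a store that both Blue and Green can reach, such as a session database or a distributed cache. The environment serving requests after the swap then still recognizes existing sessions.

The following sketch models this with hypothetical `SharedSessionStore` and `Environment` types; it does not reflect how any particular framework stores sessions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SharedSessionStore:
    """Stand-in for an external store that both environments use."""
    sessions: Dict[str, str] = field(default_factory=dict)


@dataclass
class Environment:
    name: str
    store: SharedSessionStore  # state lives outside the environment itself

    def log_in(self, session_id: str, user: str) -> None:
        self.store.sessions[session_id] = user

    def current_user(self, session_id: str) -> Optional[str]:
        return self.store.sessions.get(session_id)
```

Suppose a user logs in while Green is production. If Blue shares the same store, Blue still knows that user after the swap. If each environment kept its own store, the user would appear logged out.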

Want zero downtime deployments for your website?

We have developed an extensive toolset for enabling zero downtime deployments in both on-premises and cloud-based hosting environments. If you are a client of ours, chances are we are already in the process of moving your website to automated continuous delivery. If not, feel free to drop me an e-mail at ted@tedgustaf.com.